Stop Guessing, Start Testing: Why A/B Testing Matters

Ever launched a campaign you thought was perfect, only to watch it flop? A/B testing helps you avoid that pain.

A/B testing is a marketing experiment in which you compare two versions of a campaign to see which performs better. The change might be a headline, a thumbnail or even the background color in an ad. By isolating one variable, you can measure its direct impact.

This method is not new. Statistician Ronald Fisher established its principles a century ago by applying experiments to agriculture. As Amy Gallo explains, the same concepts migrated to clinical trials in medicine and now to digital campaigns. Today it is one of the most common ways marketers make evidence-based decisions.

In creator-led content, subtle design or messaging shifts can completely change performance. Brands often direct the creator to film the main video along with one variation. This usually involves a different opening line or CTA. For example:

Version A: “Who else wants calm mornings without coffee crashes?”

Version B: “If you’re like me, morning can feel like complete chaos until that first sip.”

Without testing both openings, the brand could launch the weaker version and waste ad spend.
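To judge whether one version really beat the other, and not just by chance, a common approach is a two-proportion z-test on the conversion counts. The sketch below is a minimal illustration using only the Python standard library. The function name and every number in it are hypothetical and do not come from a real campaign.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates.

    conv_a / conv_b: number of conversions for each version
    n_a / n_b: number of viewers shown each version
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the assumption that both versions perform the same
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical results: Version A vs. Version B of the opening line
z, p = two_proportion_z_test(conv_a=120, n_a=4000, conv_b=156, n_b=4000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

A small p-value (0.05 is a conventional cutoff, not a rule) suggests the gap between versions is unlikely to be random noise. With small audiences, even a large-looking gap may not clear that bar.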

WHAT CAN A/B TESTING ACTUALLY DO FOR MARKETERS?

A/B testing isn’t just about picking the better version. It reveals how your audience interacts with your campaigns. Some goals A/B testing supports include:

Increased Website Traffic: Attracting more clicks by testing titles and headlines.

Higher Conversion Rate: Getting more leads and sign-ups by optimizing CTA text and colors.

Lower Bounce Rates: Keeping visitors engaged by experimenting with layouts and images.

Reduced Cart Abandonment: Keeping customers from leaving before purchase.

COMMON PITFALLS IN A/B TESTING

Anthony Miyazaki, Brand Strategist and Marketing Educator, speaks on the common problems to consider when testing. I’ll highlight some I’ve seen firsthand:

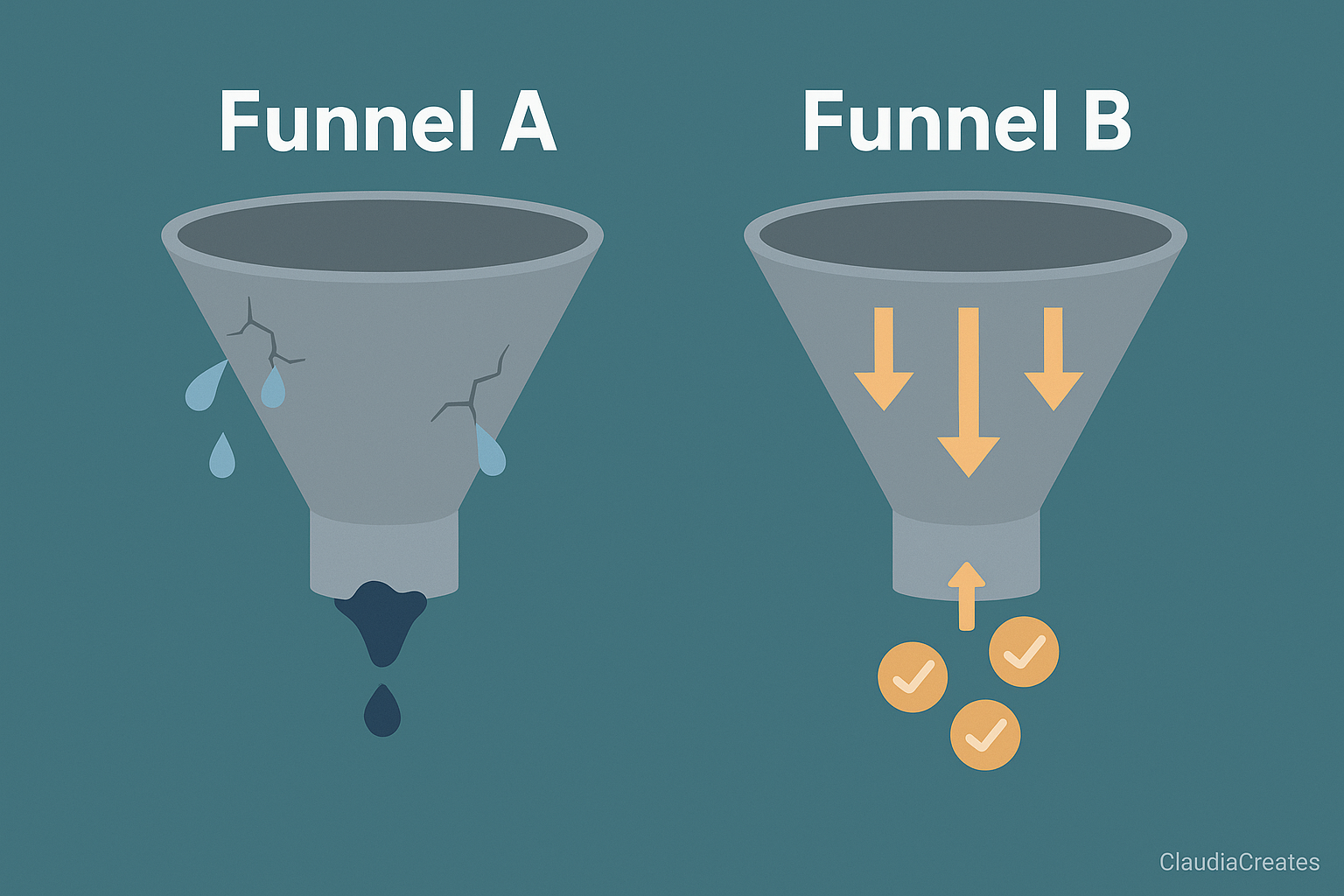

Top-of-funnel bias: Chasing clicks can pour the wrong people into your funnel, driving up service costs and returns.

Solution: Evaluate variants on multiple metrics (CTR and actions like add-to-cart)

One-off spikes: A news blip or influencer tweet can make a variant look like a winner.

Solution: Re-run the test and require the variant to win again under normal conditions

Ending tests too quickly: Stopping early can crown a winner based on a few unrepresentative days.

Solution: Run the test across multiple cycles or replications (7-14 days)

Generalizability: One win does not equal a win across all audiences or platforms.

Solution: Re-test with new segments (Gen-Z vs Millennials or TikTok vs IG)
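The first solution above, evaluating variants on more than one metric, can be sketched in a few lines of Python. This is only an illustration: the class, the function and all of the numbers are hypothetical, and add-to-cart rate is measured per click as one possible definition.

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    name: str
    impressions: int
    clicks: int
    add_to_carts: int

    @property
    def ctr(self):
        # Click-through rate: clicks per impression
        return self.clicks / self.impressions if self.impressions else 0.0

    @property
    def cart_rate(self):
        # Add-to-cart rate: add-to-carts per click
        return self.add_to_carts / self.clicks if self.clicks else 0.0


def pick_winner(a, b):
    """Return the name of the variant that leads on BOTH metrics.

    Returns None when the metrics disagree or tie, which is a cue to
    look closer before declaring a winner.
    """
    if a.ctr > b.ctr and a.cart_rate > b.cart_rate:
        return a.name
    if b.ctr > a.ctr and b.cart_rate > a.cart_rate:
        return b.name
    return None


# Hypothetical data: A attracts more clicks, B attracts better-qualified clicks
variant_a = VariantStats("A", impressions=10_000, clicks=500, add_to_carts=20)
variant_b = VariantStats("B", impressions=10_000, clicks=350, add_to_carts=35)

winner = pick_winner(variant_a, variant_b)
print(winner if winner else "Metrics disagree: review before choosing")
```

Here Version A wins on CTR but Version B wins on add-to-cart rate, so the sketch declines to name a winner. That is exactly the top-of-funnel bias described above: judged on clicks alone, A would have looked like the clear choice.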

A/B testing is how marketers let the audience choose the winning story.

I encourage brands to view A/B testing as an ongoing conversation with their audience. Test your content, learn from the data and allow your audience to guide the story.